About Me

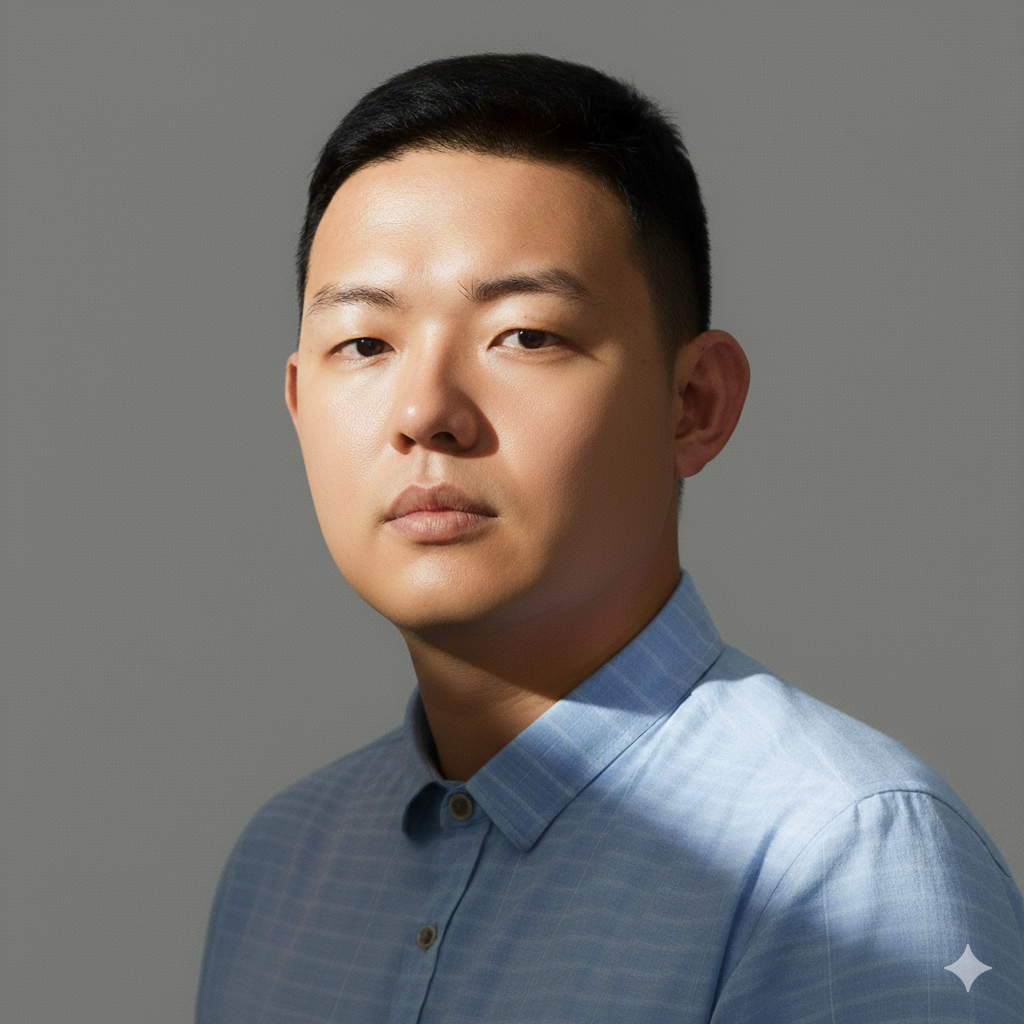

Howdy! My name is Yufeng Yang (杨钰峰, pronounced u-feng iang). I’m a third-year Ph.D. student in the CSE department at Texas A&M University, advised by Prof. Yi Zhou. I leverage foundational ML principles to address the computational and algorithmic challenges arising from large-scale machine learning. My current and prospective research focuses on:

Distributionally Robust Optimization for ML: Since the beginning of my Ph.D., my research has been driven by the challenges of distribution shift in large-scale datasets. Specifically, I focus on ML training paradigms subject to distributional drift, using optimization theory to convert loss formulations under uncertainty into tractable problems. I am particularly interested in connecting this framework to training scenarios such as adversarial training, multi-task learning, reinforcement learning, and LLM pre-/post-training.

Efficient First/Zeroth-Order Optimization Algorithms: Modern modeling techniques, including sequential modeling (RNN and SSM variants), multi-modal learning, and on-policy RL, often result in ill-conditioned loss landscapes and heavy-tailed representation distributions. My research focuses on designing efficient first- and zeroth-order optimization algorithms for these complex formulations, including bi-level, compositional, and context-dependent objectives. I am dedicated to exploring the relationship between problem-dependent parameters, algorithmic structures (such as learning rate scheduling, normalization/clipping, variance reduction, momentum/extrapolation, and preconditioning), and the resulting convergence performance.

Furthermore, I am interested in emerging topics such as system-aware optimization and RL algorithms tailored for large-scale GPU clusters. My goal is to bridge foundational theory with AI infrastructure to maximize system-level performance. I am also exploring the mechanistic interpretability of LLMs.

(Open for Collaboration) If you believe our research interests align and want to collaborate with me, feel free to drop me an email at ynyang94@tamu.edu or add me on WeChat: ynyang94 (please indicate your purpose when connecting).

I’m actively looking for AI research scientist/engineer internship positions starting in summer 2026 or 2027. Here is my brief CV.

News

📄Papers

Nested SGD for Sinkhorn Distance-Regularized Distributionally Robust Optimization [arXiv], [code], submitted; [short version] accepted at the OPT workshop, NeurIPS 2024 [poster].

Adaptive Gradient Normalization and Independent Sampling for (Stochastic) Generalized-Smooth Optimization [arXiv], [code], [slides], TMLR.

📚Education

– Ph.D. in Computer Science, Texas A&M University, 2024-present

– Ph.D. (transferred out) in Electrical Engineering, University of Utah, 2023-2024

– M.S. in Computational Science and Engineering, Rice University, 2021-2023

– B.S. in Applied Math (SSE), Chinese University of Hong Kong (Shenzhen), 2017-2021

Prior to university, I grew up and finished my elementary education in Jiayuguan, a small town in Gansu Province, China, located near the western starting point of the Great Wall.

Academic Services

– Conference Reviewer: AISTATS

– Journal Reviewer: IEEE TSP; IEEE TPAMI; Journal of Combinatorial Optimization

– Workshop Reviewer: NeurIPS-OPT workshop

Teaching

– At Rice: ELEC241, Fundamentals of Electrical Engineering I (Role: Grader).